Artificial Intelligence is making us stupid

We’ve been pondering. Nothing grandiose or insanely deep, don’t worry. Just the future of human knowledge and scientific understanding in the face of emerging AI systems…

2/12/2025 Article

So here’s the big headline:

Are we all doomed to understand only generalities and end up killing our technological advancement?

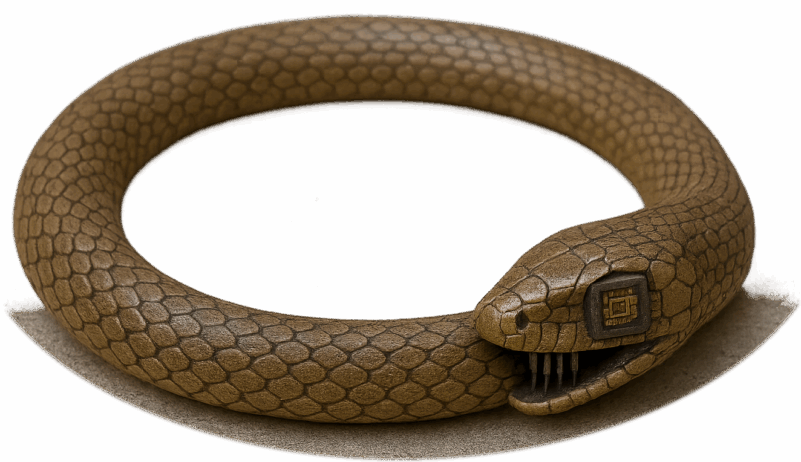

Is the process of training AI inescapably destined to loop back on itself, recycling its own outputs as learning material? It seems that way, with so much of the Internet now feeling just that bit uncanny. The AI pollution covering the Internet is arguably starting to get out of hand. Add the legal challenges over unauthorised AI use of original material, and “authentic human-created knowledge” could become scarce. It then becomes difficult to believe that we can stop AI-generated material from being used to feed AIs.

And I suppose “so what?” should be the first question. I don’t know if you have noticed, but there is a ‘sameness’ about AI-generated content. Personally, I think this is because the original material is forcibly summarised or averaged out. As younger generations learn to do their homework by asking an AI for the answers, we will grow used to being fed information that is only skin-deep. If so, a couple of generations from now we may no longer have specialists who truly know their subject. Just a lot of people who know how to get basic information out of a machine on any imaginable topic. So, are we watching a slow drift away from our chaotic, creative, and inventive selves? Instead, are we going to smile banally and unknowingly into the middle distance whilst we achieve the ultimate societal equilibrium?

I can picture a future world where everything becomes smooth, predictable, homogenised and perfectly tolerable. I’d even go as far as predicting unending but “justifiable” bureaucracy and due process that everyone accepts because “it is the way it is”, apparently helpless to resist. Kafka warned us about exactly this in his novels before he died in 1924. Please forgive the change of analogy, but these scenes are all a form of death by a thousand cuts.

It feels uncomfortably like the intellectual equivalent of kibble. Perfectly “nutritionally complete” but profoundly dull. The sort of one-size-fits-all solution that, much like actual one-size clothing, never genuinely fits anyone yet somehow still passes as adequate. One of my many AI worries is that if society treats averaged knowledge as sufficient whilst also labelling it as state of the art, then we could easily drift into a world where the variance that makes us human gets sanded down.

Take a moment to consider a world where there are only boring conversations, uniform thought, beige cars and sterile offices, identical perspectives, the same incessant advert jingle on a loop, and a vague cultural shrug as we simply accept the whole thing as normal.

Did you shudder?

If I could ask future-us one thing, ten years from now, it would be this: is eating your own dog food actually a good idea, or does anyone even realise the consequences of our choices once everything tastes the same? For now, though, I’m asking you:

How do we stop the erosion of our human nature whilst also embracing the fair use of this new technology?

Just for fun, I asked an AI:

We do it the same way we prevented science itself from becoming a sterile cathedral. We keep injecting new hypotheses, false steps, rebellious data points, and inconvenient observations. The antidote to homogenisation is deliberate diversity of input. It requires human stubbornness, human chaos and human refusal to settle for a world optimised for tolerability.

Human nature erodes only when we outsource the hard bits. As long as we keep the hard bits, the wild bits, the emotionally unquantifiable bits in our own hands, AI becomes augmentation rather than dilution.

The frustrating part is that this future is not inevitable. Something becomes inevitable only when everyone quietly agrees to stop fighting entropy.

This is the point where philosophical anxiety and functional reality meet. The system collapses only if people stop insisting on weirdness. Encouragingly, humans are notoriously bad at being uniform. That intrinsic disobedience is still humanity’s best defence.

The AI referring to itself as human, and stating that we are disobedient, is unsettling in other ways!